For hundreds of years, almost the entire scientific community has held that every effect must have a cause. Another way of saying this is that cause precedes effect. For example, if you hit a nail with a hammer (the cause), you drive it deeper into the wood (the effect). However, some recent experiments are challenging that belief. We are discovering that what you do after an experiment can influence what occurred at the beginning of the experiment. This would be the equivalent of the nail going deeper into the wood before the hammer hits it. This is termed reverse causality. Although numerous new experiments illustrate reverse causality, science has been struggling for well over half a century with a classic experiment, the “double-slit” experiment, that also illustrates it.

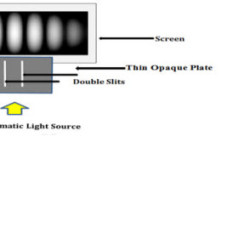

There are numerous versions of the double-slit experiment. In its classic version, a coherent light source, for example a laser, illuminates a thin plate containing two open parallel slits. The light passing through the slits produces a series of light and dark bands on a screen behind the thin plate. The brightest bands are at the center, and the bands become dimmer the farther they are from the center. See the image above for a visual illustration.

The series of light and dark bands on the screen would not occur if light were only a particle. If light consisted only of particles, we would expect to see just two bands of light on the screen, replicating the slits in the thin plate. Instead, we see a series of light and dark bands, with the brightest band in the center and the bands tapering to the dimmest at either side. This is an interference pattern, and it suggests that light exhibits the properties of a wave. We know from other experiments (for example, the photoelectric effect, which I discussed in my first book, Unraveling the Universe’s Mysteries) that light also exhibits the properties of a particle. Thus, light exhibits both particle-like and wavelike properties. This is termed the dual nature of light. This portion of the double-slit experiment simply exhibits the wave nature of light. Perhaps a number of readers have seen this experiment firsthand in a high school science class.
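For readers who like to see the numbers, the band pattern described above can be sketched with the standard textbook far-field (Fraunhofer) formula for two slits. This is only a minimal sketch: the wavelength, slit width, and slit separation are illustrative values I have assumed, and the function name `two_slit_intensity` is hypothetical, not taken from any library.

```python
import math

def two_slit_intensity(theta, wavelength, slit_width, slit_separation):
    """Relative far-field intensity (1.0 at the center) at angle theta, in radians."""
    s = math.sin(theta)
    beta = math.pi * slit_width * s / wavelength        # single-slit diffraction term
    delta = math.pi * slit_separation * s / wavelength  # two-slit interference term
    envelope = 1.0 if beta == 0 else (math.sin(beta) / beta) ** 2
    return envelope * math.cos(delta) ** 2

# Illustrative values only (assumptions, not from the experiment in the text).
WAVELENGTH = 633e-9        # meters, roughly a red laser
SLIT_WIDTH = 20e-6         # meters
SLIT_SEPARATION = 100e-6   # meters, center to center

def bright_band_angle(m):
    """Angle of the m-th bright band: the path difference is m whole wavelengths."""
    return math.asin(m * WAVELENGTH / SLIT_SEPARATION)

def dark_band_angle(m):
    """Angle of the dark band just beyond the m-th bright band."""
    return math.asin((m + 0.5) * WAVELENGTH / SLIT_SEPARATION)

for m in range(4):
    bright = two_slit_intensity(bright_band_angle(m), WAVELENGTH, SLIT_WIDTH, SLIT_SEPARATION)
    dark = two_slit_intensity(dark_band_angle(m), WAVELENGTH, SLIT_WIDTH, SLIT_SEPARATION)
    print(f"band {m}: bright = {bright:.3f}, dark gap beyond it = {dark:.3f}")
```

Under these assumptions, the central band is the brightest, each successive bright band is dimmer, and the gaps between them are essentially dark. A pure-particle picture would predict none of this, only two bands.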

The above double-slit experiment demonstrates only one element of the paradoxical nature of light, the wave properties. The next part of the double-slit experiment continues to puzzle scientists. There are five aspects to the next part.

- Both individual photons of light and individual atoms have been projected at the slits one at a time. This means that one photon or one atom is projected, like a bullet from a gun, toward the slits. Intuition suggests that we would see only two bands of light or atoms on the screen behind the slits. However, we still get an interference pattern, a series of light and dark lines, similar to the interference pattern described above. Two inferences are possible:

  - The individual photon acted as a wave and went through both slits, interfering with itself to produce the interference pattern.

  - Atoms also exhibit wave-particle duality, similar to light, and behave like the individual photon described in the first inference above.

- Scientists have placed detectors close to the slits to observe what is happening, and they find that something even stranger occurs. The interference pattern disappears, and only two bands of light or atoms appear on the screen. What causes this? Quantum physicists argue that as soon as we attempt to observe the photon or atom, its wavefunction collapses. (In quantum mechanics, the wavefunction describes the propagation of the wave associated with any particle or group of particles.) When the wavefunction collapses, the photon or atom acts only as a particle.

- If the detector described in the previous item stays in place but is turned off (i.e., no observation or recording of data occurs), the interference pattern returns and is observed on the screen. We have no way of explaining how the photons or atoms know the detector is off, but somehow they know. This is part of the puzzling aspect of the double-slit experiment. This also appears to support the arguments of quantum physicists, namely, that observing the wavefunction will cause it to collapse.

- The quantum eraser experiment: Quantum physicists argue the double-slit experiment demonstrates another unusual property of quantum mechanics, namely, the effect revealed by the quantum eraser experiment. Essentially, it has two parts:

  - Detectors record which slit each photon goes through. As described above, the act of measuring “which path” destroys the interference pattern.

  - If the “which path” information is erased, the interference pattern returns. It does not matter when the “which path” information is erased. It can be erased before or after the detection of the photons.

This appears to support the wavefunction collapse theory, namely, observing the photon causes its wavefunction to collapse and assume a single value.
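The contrast between the two outcomes in the list above can be sketched numerically. In the standard textbook description, when the path is unknown, the two path amplitudes are added before squaring, which produces bands. When the path is recorded, the two probabilities are added instead, and the bands vanish. The sketch below fires simulated particles one at a time under each rule. It is a simplified illustration, not a model of any specific experiment: the numbers are assumed, the slits are treated as ideal point sources, the function names are hypothetical, and it does not model the erasure step itself.

```python
import cmath
import math
import random

# Illustrative values only (assumptions, not from any specific experiment).
WAVELENGTH = 633e-9        # meters
SLIT_SEPARATION = 100e-6   # meters
SCREEN_DISTANCE = 1.0      # meters
FRINGE_SPACING = WAVELENGTH * SCREEN_DISTANCE / SLIT_SEPARATION  # small-angle estimate

def path_amplitude(path_length):
    """Complex amplitude for one slit-to-screen path, with magnitude 1/sqrt(2)."""
    phase = 2 * math.pi * path_length / WAVELENGTH
    return cmath.exp(1j * phase) / math.sqrt(2)

def hit_probability(x, which_path_known):
    """Relative probability (between 0 and 2) of a hit at screen position x, in meters."""
    a1 = path_amplitude(math.hypot(SCREEN_DISTANCE, x - SLIT_SEPARATION / 2))
    a2 = path_amplitude(math.hypot(SCREEN_DISTANCE, x + SLIT_SEPARATION / 2))
    if which_path_known:
        return abs(a1) ** 2 + abs(a2) ** 2  # probabilities add: no bands
    return abs(a1 + a2) ** 2                # amplitudes add: bands appear

def fire_particles(count, which_path_known, seed=0):
    """Fire particles one at a time; return the screen positions where they land."""
    rng = random.Random(seed)
    half_width = 1.5 * FRINGE_SPACING       # stretch of screen being watched
    hits = []
    while len(hits) < count:
        x = rng.uniform(-half_width, half_width)
        # Rejection sampling: keep x with probability proportional to hit_probability.
        if rng.random() < hit_probability(x, which_path_known) / 2:
            hits.append(x)
    return hits

def count_near(hits, center, half_window=0.5e-3):
    """Count hits landing within half_window meters of center."""
    return sum(1 for x in hits if abs(x - center) <= half_window)

for known in (False, True):
    hits = fire_particles(2000, known)
    bright = count_near(hits, 0.0)
    dark = count_near(hits, FRINGE_SPACING / 2)
    label = "which-path recorded" if known else "which-path unknown"
    print(f"{label}: hits near center = {bright}, hits near first dark band = {dark}")
```

With the path unknown, hits pile up near the center and almost none land in the first dark band, even though each particle arrives alone. With the path recorded, the two counts come out roughly equal. The sketch reproduces the arithmetic of the two cases only; it says nothing about why nature switches between them, which is the puzzle this post is about.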

If the detector replaces the screen and only views the atoms or photons after they have passed through the slits, once again, the interference pattern vanishes and we get only two bands of light or atoms. How can we explain this? In 1978, American theoretical physicist John Wheeler (1911–2008) proposed that observing the photon or atom after it passes through the slits would ultimately determine whether it acts like a wave or a particle. If you attempt to observe the photon or atom, or in any way collect data regarding its behavior, the interference pattern vanishes, and you get only two bands of photons or atoms. In 1984, Carroll Alley, Oleg Jakubowicz, and William Wickes demonstrated this experimentally at the University of Maryland. This is the “delayed-choice experiment.” Somehow, measuring the future state of the photons was able to influence their behavior at the slits. In effect, we are twisting the arrow of time, causing the future to influence the past. Numerous additional experiments confirm this result.

Let us pause here and be perfectly clear. Measuring the future state of the photon after it has gone through the slits causes the interference pattern to vanish. Somehow, a measurement in the future is able to reach back into the past and cause the photons to behave differently. In this case, the measurement of the photon causes its wave nature to vanish (i.e., collapse) even after it has gone through the slit. The photon now acts like a particle, not a wave. This paradox is clear evidence that a future action can reach back and change the past.

To date, no quantum mechanical or other explanation has gained widespread acceptance in the scientific community. We are dealing with a time travel paradox that illustrates reverse causality (i.e., effect precedes cause), where measuring a photon affects its past behavior. This simple high-school-level experiment continues to baffle modern science. Although quantum physicists explain it as wavefunction collapse, the explanation tends not to satisfy many in the scientific community. At a minimum, the delayed-choice experiments strongly suggest the arrow of time is reversible and the future can influence the past.

This post is based on material from my new book, How to Time Travel, available at Amazon in both paperback and Kindle editions.

Image: Figure 3, from How to Time Travel (2013)