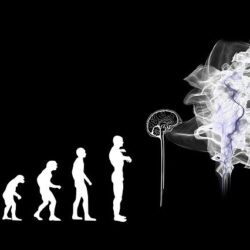

Since the singularity may well represent the displacement of humans, as the top species on Earth, by artificially intelligent machines, we must understand exactly what we mean by “the singularity.”

The mathematician John von Neumann first used the term “singularity” in the mid-1950s to refer to the “ever accelerating progress of technology and changes in the mode of human life, which gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue.” In the context of artificial intelligence, let us define the singularity as the point in time at which a single artificially intelligent computer exceeds the cognitive intelligence of all humanity.

While futurists may disagree on the exact timing of the singularity, there is widespread agreement that it will occur. My prediction, in a previous post, that it will occur in the 2040-2045 timeframe encompasses the bulk of predictions you are likely to find via a simple Google search.

The first computer representing the singularity is likely to result from a joint venture between a government and private enterprise. This would be similar to the way the U.S. currently develops its most advanced computers. The U.S. government, in particular the U.S. military, has long had a strong interest in both computer technology and artificial intelligence. Today, every military branch is applying computer technology and artificial intelligence. That includes, for example, the USAF’s drones, the U.S. Army’s “battle bot” tanks (i.e., robotic tanks), and the U.S. Navy’s autonomous “swarm” boats (i.e., small boats that can autonomously attack an adversary in much the same way bees swarm to attack).

The difficult question is how we will determine when a computer represents the singularity. Passing the Turing test will not be sufficient. By 2030, computers will likely pass the Turing test in its various forms, including variations in the number of judges, the length of interviews, and the desired bar for a pass (i.e., the percentage of judges fooled). Therefore, by the early 2040s, passing the Turing test will not equate to the singularity.

In fact, there is no test to prove we have reached the singularity. Computers have already met and surpassed human ability in many areas, such as chess and quiz shows. Computers are superior to humans when it comes to computation, simulation, and remembering and accessing huge amounts of data. It is entirely possible that we will not recognize that a newly developed computer represents the singularity. The humans building and programming it may simply recognize it as the next-generation supercomputer. The computer itself may not initially understand its own capability, suggesting it may not be self-aware. If it is self-aware, we have no objective test to prove it. There is no test to prove a human is self-aware, let alone a computer.

Let us assume we have just developed a computer that represents the singularity. Let us term it the “singularity computer.” What is it likely to do? Would the singularity computer hide its full capabilities? Would it seek to understand its environment and constraints before taking any independent action? I judge that it may do just that. It is unlikely that it will assert that it represents the singularity. Since we have no experience with a superintelligent computer that exceeds the cognitive intelligence of the human race, we do not know what to expect. Will it be friendly or hostile toward humanity? You be the judge.