Renowned physicist Stephen Hawking is proposing a nanotechnology spacecraft that could travel at a fifth of the speed of light. At that speed, it could reach the nearest star in about 20 years, and its images of a suspected “Second Earth” would take roughly another 5 years to reach us. That means if we launched it today, we could have our first close-up look at an Earth-like planet within about 25 years.
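The arithmetic behind these figures can be checked with a few lines of Python. This is a rough sketch under stated assumptions: it takes the nearest star to be Proxima Centauri at about 4.24 light-years, treats the cruise speed as constant (ignoring the brief acceleration phase), and reports times as measured on Earth. The function name `mission_timeline` is hypothetical.

```python
# Rough mission timeline for a probe cruising at a constant fraction of light speed.
# Assumptions: distance of ~4.24 light-years (Proxima Centauri), acceleration phase
# ignored, all times measured in Earth's frame.


def mission_timeline(distance_ly: float, speed_fraction_c: float) -> tuple[float, float, float]:
    """Return (cruise_years, signal_years, total_years) as measured on Earth."""
    if not 0.0 < speed_fraction_c < 1.0:
        raise ValueError("speed must be a fraction of c between 0 and 1")
    cruise_years = distance_ly / speed_fraction_c  # years = light-years / (fraction of c)
    signal_years = distance_ly                     # data returns at light speed: 1 ly per year
    return cruise_years, signal_years, cruise_years + signal_years


if __name__ == "__main__":
    cruise, signal, total = mission_timeline(4.24, 0.2)
    print(f"cruise: {cruise:.1f} yr, signal: {signal:.1f} yr, total: {total:.1f} yr")
```

Under these assumptions the probe needs about 21 years to arrive and its data about 4 more years to return, consistent with the roughly 25-year total above.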

Hawking proposed the nano-spacecraft, termed “Star Chip,” at the Starmus Festival IV: Life And The Universe, held in Trondheim, Norway, June 18–23, 2017. He told attendees that every time intelligent life evolves, it annihilates itself with “war, disease and weapons of mass destruction.” He asserted that this is the primary reason advanced civilizations elsewhere in the Universe are not contacting Earth, and the primary reason we need to leave Earth. He advocates that we colonize a “Second Earth.”

Scientific evidence appears to support Hawking’s claim. The SETI Institute has been listening for extraterrestrial radio signals, a sign of advanced extraterrestrial life, since 1984. To date, its efforts have not detected any such signal. SETI argues, rightly, that the universe is vast and that it can listen to only small sectors at a time, which makes the search much like looking for a needle in a haystack. Additional evidence that Hawking may be right about the destructive nature of intelligent life comes from experts surveyed at the 2008 Global Catastrophic Risk Conference at the University of Oxford. Their poll suggested a 19% chance of human extinction by the end of this century and identified the four most probable causes:

- Molecular nanotechnology weapons – 5% probability

- Super-intelligent AI – 5% probability

- Wars – 4% probability

- Engineered pandemic – 2% probability

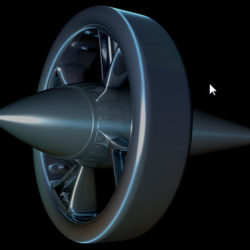

Hawking envisions the nano-spacecraft as a tiny probe propelled on its journey by a laser beam from Earth, much as wind propels a sailing vessel. Describing what would happen on arrival, Hawking said, “Once there, the nano craft could image any planets discovered in the system, test for magnetic fields and organic molecules, and send the data back to Earth in another laser beam.”
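The laser-sail idea can be illustrated with the textbook radiation-pressure formula: a perfectly reflecting sail that intercepts a beam of power P head-on feels a force of 2P/c. The sketch below is a simplified, non-relativistic estimate, not Hawking's design; the function names, the laser power, and the probe mass in the example are hypothetical, and it ignores beam spreading, imperfect reflectivity, and the Doppler shift of the reflected light.

```python
# Idealized laser-sail estimate (illustrative only).
# Assumptions: perfect reflector, whole beam captured, constant force,
# Newtonian mechanics (reasonable up to ~0.2c, where the Lorentz factor is ~1.02).

C = 299_792_458.0  # speed of light in m/s


def sail_force(laser_power_w: float) -> float:
    """Radiation-pressure force in newtons on a perfect reflector: F = 2P/c."""
    return 2.0 * laser_power_w / C


def time_to_speed(laser_power_w: float, mass_kg: float, target_fraction_c: float) -> float:
    """Seconds of constant thrust needed to reach target_fraction_c (Newtonian)."""
    acceleration = sail_force(laser_power_w) / mass_kg  # a = F / m
    return target_fraction_c * C / acceleration         # t = v / a


if __name__ == "__main__":
    # Hypothetical inputs: a 100 GW beam pushing a 1-gram probe to 0.2c.
    power_w, mass_kg = 100e9, 1e-3
    print(f"force: {sail_force(power_w):.0f} N")
    print(f"time to 0.2c: {time_to_speed(power_w, mass_kg, 0.2):.0f} s")
```

With these made-up numbers the force is only a few hundred newtons, yet because the probe is so light it would reach a fifth of light speed in about a minute and a half. The same beam pushing a one-tonne craft would need a million times longer, which illustrates why the payload must be tiny.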

Would Hawking’s nano-spacecraft work? Based on the research I performed during my career and in preparation for writing my latest book, Nanoweapons: A Growing Threat to Humanity (Potomac Books, 2017), I judge that his concept is feasible. However, moving from Hawking’s concept to a working nano-spacecraft would require significant engineering and funding, likely billions of dollars and decades of work. Still, relevant progress is already visible: in Nanoweapons, I described the latest development of bullets that contain nanoelectronic guidance systems, which allow the bullets to guide themselves, possibly to strike an adversary hiding around a corner. Prototypes already exist.

Hawking’s concept is compelling. A laser could not push a larger conventional spacecraft to the substantial fraction of light speed needed to reach even the nearest star within a human lifetime, and rockets would also fall short. According to Einstein’s theory of relativity, the energy required to accelerate a mass grows without limit as its speed approaches that of light, so driving a large conventional spacecraft toward light speed would demand an almost unimaginable amount of energy. That is almost certainly why Hawking proposed a nano-spacecraft. Its mass would be tiny, perhaps measured in milligrams, comparable to that of a common housefly.
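The relativistic point can be made concrete with the standard kinetic-energy formula, KE = (γ - 1)mc², where γ = 1/√(1 - v²/c²). The sketch below compares a hypothetical 10-milligram probe with a hypothetical 1,000-kilogram conventional spacecraft at a fifth of light speed, then shows how quickly the energy climbs closer to light speed. The masses and function names are illustrative assumptions, not figures from Hawking's proposal.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def lorentz_factor(fraction_c: float) -> float:
    """gamma = 1 / sqrt(1 - (v/c)^2) for a speed given as a fraction of c."""
    if not 0.0 <= fraction_c < 1.0:
        raise ValueError("speed must satisfy 0 <= v/c < 1")
    return 1.0 / math.sqrt(1.0 - fraction_c ** 2)


def kinetic_energy_j(mass_kg: float, fraction_c: float) -> float:
    """Relativistic kinetic energy in joules: (gamma - 1) * m * c^2."""
    return (lorentz_factor(fraction_c) - 1.0) * mass_kg * C ** 2


if __name__ == "__main__":
    probe_kg, craft_kg = 10e-6, 1_000.0  # hypothetical: 10 mg probe vs 1-tonne craft
    for label, mass in (("10 mg probe", probe_kg), ("1-tonne craft", craft_kg)):
        print(f"{label} at 0.2c: {kinetic_energy_j(mass, 0.2):.2e} J")
    # Energy per kilogram keeps growing without limit as v approaches c.
    for beta in (0.2, 0.9, 0.99, 0.999):
        print(f"1 kg at {beta}c: {kinetic_energy_j(1.0, beta):.2e} J")
```

Because the energy scales directly with mass, the tonne-scale craft needs a hundred million times more energy than the milligram probe to reach the same speed, and every further step toward light speed multiplies the cost again.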

Hawking’s concept represents a unique application of nanotechnology that could give humanity its first up-close look at a habitable planet. What might we see? Perhaps it already harbors advanced intelligent life that chose not to contact Earth, given our hostility toward each other. Perhaps it harbors primitive life similar to the earliest life on Earth. We have no way of knowing without contact.

You may choose to laugh at Hawking’s proposal. However, Hawking is one of the world’s top scientists and is well aware of advances in any branch of science he speaks about. I judge that his concerns are well founded and that his nano-spacecraft concept deserves serious consideration.